The incredible success of BERT in Natural Language Processing (NLP) showed that large models trained on unlabeled data are able to learn powerful representations of language. These representations have been shown to encode information about syntax and semantics. In this blog post we ask the question: Can similar methods be applied to biological sequences, specifically proteins? If so, to what degree do they improve performance on protein prediction problems that are relevant to biologists?

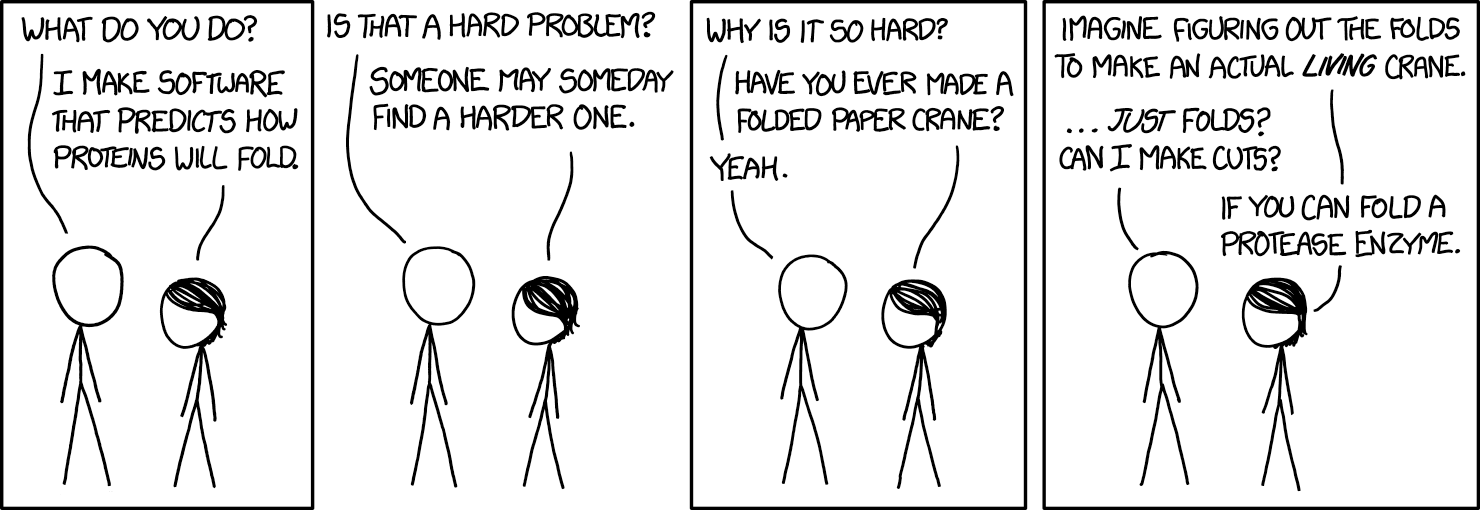

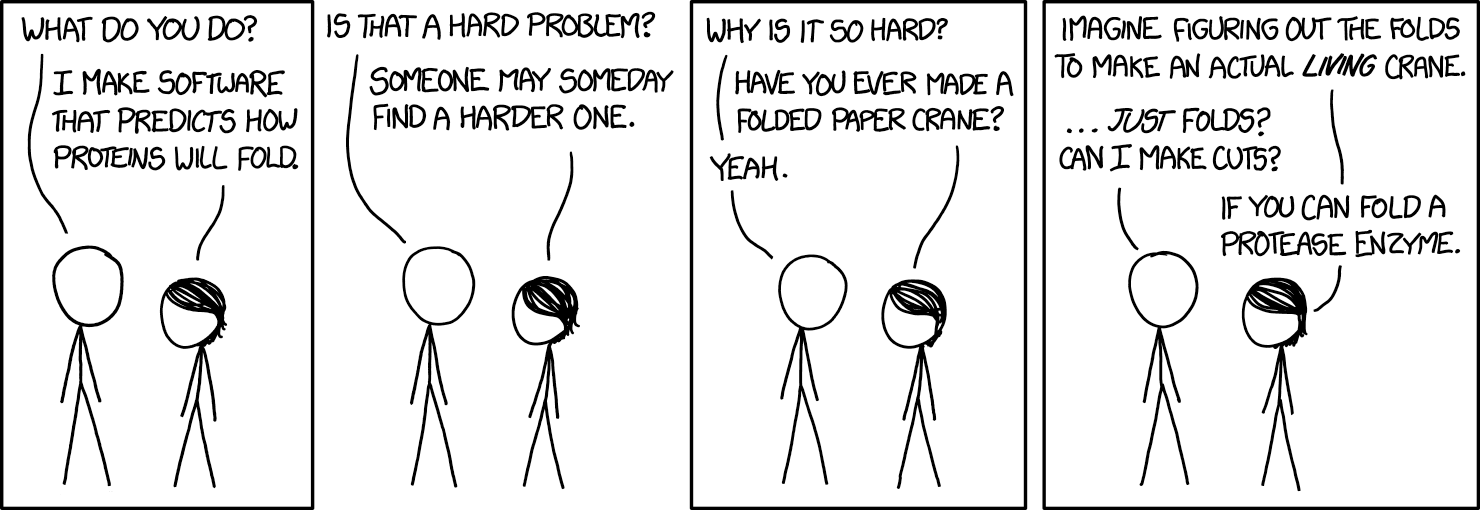

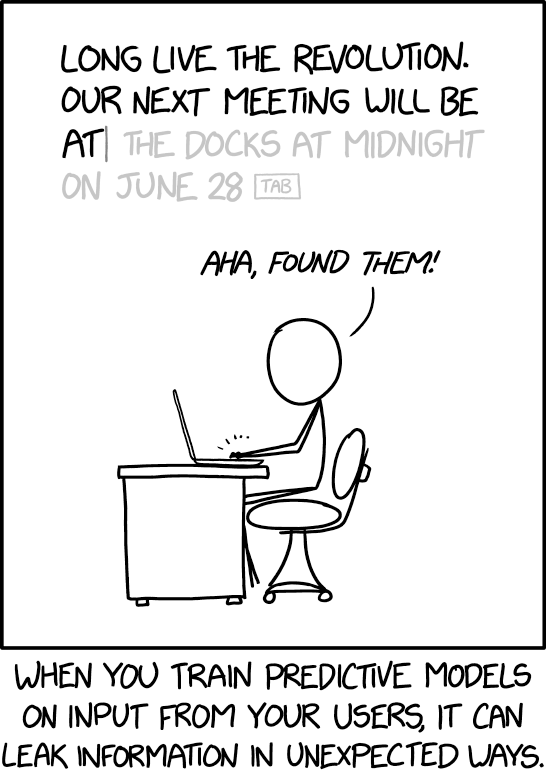

We discuss our recent work on TAPE: Tasks Assessing Protein Embeddings (preprint) (github), a benchmarking suite for protein representations learned by various neural architectures and self-supervised losses. We also discuss the challenges that proteins present to the ML community, previously described by xkcd:


When learning to follow natural language instructions, neural networks tend to be very data-hungry: they require a huge number of examples pairing language with actions in order to learn effectively. This post is about reducing those heavy data requirements by first watching actions in the environment before moving on to learning from language data. Inspired by the idea that it is easier to map language to meanings that have already been formed, we introduce a semi-supervised approach that aims to separate the formation of abstractions from the learning of language. Empirically, we find that pre-learning patterns in the environment can help us learn grounded language with much less data.


AI agents have learned to play Dota, StarCraft, and Go by training to beat an automated system that increases in difficulty as the agent gains skill at the game: in vanilla self-play, the AI agent plays games against itself, while in population-based training, each agent must play against a population of other agents, and the entire population learns to play the game.
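
The two schemes differ mainly in how an agent's opponent is chosen. The sketch below is a hypothetical toy, not the setup used for Dota, StarCraft, Go, or Quake: each "agent" is just a mixed strategy over rock, paper, and scissors, and it improves with a multiplicative-weights step against its current opponent. The game, the update rule, and the names `Agent`, `update`, `self_play`, and `population_training` are all illustrative assumptions.

```python
import math
import random
from dataclasses import dataclass, field
from typing import List

# Hypothetical toy game: PAYOFF[i][j] is the reward for playing action i against j
# (0 = rock, 1 = paper, 2 = scissors).
PAYOFF = [[0, -1, 1],
          [1, 0, -1],
          [-1, 1, 0]]

@dataclass
class Agent:
    """Toy stand-in for a policy: a mixed strategy over the three actions."""
    name: str
    probs: List[float] = field(default_factory=lambda: [1 / 3, 1 / 3, 1 / 3])

def expected_gains(opponent: Agent) -> List[float]:
    """Expected reward of each pure action against the opponent's mixed strategy."""
    return [sum(PAYOFF[i][j] * opponent.probs[j] for j in range(3)) for i in range(3)]

def update(agent: Agent, opponent: Agent, lr: float = 0.1) -> None:
    """One multiplicative-weights step toward actions that beat the given opponent."""
    gains = expected_gains(opponent)
    weights = [p * math.exp(lr * g) for p, g in zip(agent.probs, gains)]
    total = sum(weights)
    agent.probs = [w / total for w in weights]

def frozen_copy(agent: Agent) -> Agent:
    """Snapshot used as a fixed opponent for a single training step."""
    return Agent(agent.name + "-frozen", list(agent.probs))

def self_play(agent: Agent, steps: int) -> None:
    for _ in range(steps):
        # Vanilla self-play: the opponent is a copy of the agent itself.
        update(agent, frozen_copy(agent))

def population_training(population: List[Agent], steps: int, rng: random.Random) -> None:
    for _ in range(steps):
        for agent in population:
            # Population-based training: the opponent is sampled from the other members.
            opponent = rng.choice([a for a in population if a is not agent])
            update(agent, frozen_copy(opponent))

# Usage: an agent that over-plays rock shifts probability toward paper after self-play.
rocky = Agent("rocky", [0.6, 0.2, 0.2])
self_play(rocky, 1)
```

In rock-paper-scissors these simple dynamics can cycle rather than settle, so the toy only illustrates opponent selection; real systems replace `update` with a full RL algorithm and a neural-network policy.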

This technique has a lot going for it. There is a natural curriculum in difficulty: as the agent improves, the task it faces gets harder, which leads to efficient learning. It doesn’t require any manual design of opponents or handcrafted features of the environment. And most notably, in all of the games above, the resulting agents have beaten human champions.

The technique has also been used in collaborative settings: OpenAI had one public match where each team was composed of three OpenAI Five agents alongside two human experts, and the For The Win (FTW) agents trained to play Quake were paired with both humans and other agents during evaluation. In the Quake case, humans rated the FTW agents as more collaborative than fellow humans in a participant survey.


In this blog post, we explore a functional paradigm for implementing reinforcement learning (RL) algorithms. In this paradigm, developers write the numerics of their algorithm as independent, pure functions, and then use a library to compile them into policies that can be trained at scale. We share how these ideas were implemented in RLlib’s policy builder API, eliminating thousands of lines of “glue” code and bringing support for Keras and TensorFlow 2.0.
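
A minimal sketch of the pattern, assuming nothing about RLlib's actual interface: the algorithm's numerics live in pure functions (`discounted_returns`, `pg_postprocess`, `pg_loss`), and a hypothetical `make_policy_class` helper bundles them into a policy class. All of these names are illustrative. The loss is computed on plain floats from precomputed log-probabilities, with no model or autodiff, so it only shows how the pieces compose.

```python
from typing import Callable, Dict, List, Optional

Batch = Dict[str, List[float]]

def discounted_returns(rewards: List[float], gamma: float) -> List[float]:
    """Pure function: discounted return-to-go at every timestep."""
    returns, running = [], 0.0
    for r in reversed(rewards):
        running = r + gamma * running
        returns.append(running)
    return returns[::-1]

def pg_postprocess(batch: Batch, config: dict) -> Batch:
    """Pure function: returns a new batch with a 'returns' column; the input is not mutated."""
    return {**batch, "returns": discounted_returns(batch["rewards"], config["gamma"])}

def pg_loss(batch: Batch) -> float:
    """Pure function: REINFORCE-style surrogate loss, -mean(log_prob * return)."""
    terms = [lp * ret for lp, ret in zip(batch["logp"], batch["returns"])]
    return -sum(terms) / len(terms)

def make_policy_class(name: str,
                      loss_fn: Callable[[Batch], float],
                      postprocess_fn: Callable[[Batch, dict], Batch],
                      default_config: dict) -> type:
    """Hypothetical builder: wires pure functions into a policy class.
    A real library would also own model construction, optimizers, and distributed execution."""
    class Policy:
        def __init__(self, config: Optional[dict] = None):
            self.config = {**default_config, **(config or {})}

        def compute_loss(self, batch: Batch) -> float:
            return loss_fn(postprocess_fn(batch, self.config))

    Policy.__name__ = name
    return Policy

PGPolicy = make_policy_class("PGPolicy", pg_loss, pg_postprocess, {"gamma": 0.99})
```

For example, `PGPolicy({"gamma": 0.5}).compute_loss({"rewards": [1.0, 1.0], "logp": [-1.0, -2.0]})` evaluates to 1.75: the returns are [1.5, 1.0], so the loss is -((-1.0)(1.5) + (-2.0)(1.0)) / 2. Because each piece is a pure function, it can be tested in isolation, and the builder is free to decide how and where to execute it.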


Figure 1: Our approach (PDDM) can efficiently and effectively learn complex dexterous manipulation skills in both simulation and the real world. Here, the learned model is able to control the 24-DoF Shadow Hand to rotate two free-floating Baoding balls in the palm, using just 4 hours of real-world data with no prior knowledge/assumptions of system or environment dynamics.

Dexterous manipulation with multi-fingered hands is a grand challenge in robotics: the versatility of the human hand is as yet unrivaled by the capabilities of robotic systems, and bridging this gap will enable more general and capable robots. Although some real-world tasks (like picking up a television remote or a screwdriver) can be accomplished with simple parallel jaw grippers, there are countless tasks (like functionally using the remote to change the channel or using the screwdriver to drive in a screw) in which dexterity enabled by redundant degrees of freedom is critical. In fact, dexterous manipulation is defined as being object-centric, with the goal of controlling object movement through precise control of forces and motions, something that is not possible without the ability to simultaneously impact the object from multiple directions. For example, using only two fingers to attempt common tasks such as opening the lid of a jar or hitting a nail with a hammer would quickly encounter the challenges of slippage, complex contact forces, and underactuation. Although dexterous multi-fingered hands can indeed enable a wide range of flexible manipulation skills, many of these more complex behaviors are also notoriously difficult to control: they require finely balancing contact forces, breaking and reestablishing contacts repeatedly, and maintaining control of unactuated objects. Success in such settings requires a sufficiently dexterous hand, as well as an intelligent policy that can endow such a hand with the appropriate control strategy. We study precisely this in our work on Deep Dynamics Models for Learning Dexterous Manipulation.


Kourosh Hakhamaneshi

Sep 26, 2019

In this post, we share some recent promising results regarding the applications of deep learning in analog IC design. While this work targets a specific application, the proposed methods can be used in other black-box optimization problems where the environment lacks a cheap, fast evaluation procedure.

So let’s break down how the analog IC design process is usually done, and then how we incorporated deep learning to ease the flow.


Adam Stooke

Sep 24, 2019

UPDATE (15 Feb 2020): Documentation is now available for rlpyt! See it at rlpyt.readthedocs.io. It describes program flow, code organization, and implementation details, including class, method, and function references for all components. The code examples still introduce ways to run experiments, and now the documentation is a more in-depth resource for researchers and developers building new ideas with rlpyt.

Since the advent of deep reinforcement learning for game play in 2013, and simulated robotic control shortly after, a multitude of new algorithms have flourished. Most of these are model-free algorithms, which can be categorized into three families: deep Q-learning, policy gradients, and Q-value policy gradients. Because they rely on different learning paradigms, and because they address different (but overlapping) control problems, distinguished by discrete versus continuous action sets, these three families have developed along separate lines of research. Currently, very few, if any, code bases incorporate all three kinds of algorithms, and many of the original implementations remain unreleased. As a result, practitioners often must develop from different starting points and potentially learn a new code base for each algorithm of interest or baseline comparison. RL researchers must invest time reimplementing algorithms, a valuable individual exercise but one which incurs redundant effort across the community, or worse, one that presents a barrier to entry.

Yet these algorithms share a great depth of common reinforcement learning machinery. We are pleased to share rlpyt, which leverages this commonality to offer all three algorithm families built on a shared, optimized infrastructure, in one repository. Available from BAIR at https://github.com/astooke/rlpyt, it contains modular implementations of many common deep RL algorithms in Python using PyTorch, a leading deep learning library. Among numerous existing implementations, rlpyt is a more comprehensive open-source resource for researchers.


We introduce Bit-Swap, a scalable and effective lossless data compression technique based on deep learning. It extends previous work on practical compression with latent variable models, based on bits-back coding and asymmetric numeral systems. In our experiments, Bit-Swap is able to beat benchmark compressors on a highly diverse collection of images. We’re releasing code for the method and optimized models so that people can explore and advance this line of modern compression ideas. We also release a demo and a pre-trained model for Bit-Swap image compression and decompression on your own image. See the end of the post for a talk that covers how bits-back coding and Bit-Swap work.
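
Bit-Swap builds on asymmetric numeral systems (ANS). As a rough illustration of that one ingredient only (not of Bit-Swap, bits-back coding, or the released code), the sketch below implements a tiny range-ANS (rANS) coder over a hand-picked, fixed frequency table. It leans on Python's unbounded integers so the state never needs renormalizing; a practical coder renormalizes to keep the state in a machine word. The function names and the frequency table are assumptions for illustration.

```python
from typing import Dict, Tuple

def cumulative(freqs: Dict[str, int]) -> Tuple[Dict[str, int], int]:
    """Start of each symbol's slot range inside [0, M), plus the total M."""
    starts, total = {}, 0
    for s in sorted(freqs):
        starts[s] = total
        total += freqs[s]
    return starts, total

def rans_encode(message: str, freqs: Dict[str, int]) -> int:
    starts, M = cumulative(freqs)
    x = 1  # initial state
    for s in reversed(message):  # encode backwards so decoding yields the original order
        f = freqs[s]
        # Grows the state by roughly a factor M / f, i.e. about -log2(f / M) bits.
        x = (x // f) * M + starts[s] + (x % f)
    return x

def rans_decode(x: int, length: int, freqs: Dict[str, int]) -> str:
    starts, M = cumulative(freqs)
    out = []
    for _ in range(length):
        slot = x % M
        # The slot falls inside exactly one symbol's range [start, start + freq).
        s = next(t for t in starts if starts[t] <= slot < starts[t] + freqs[t])
        x = freqs[s] * (x // M) + slot - starts[s]  # exact inverse of the encode step
        out.append(s)
    return "".join(out)
```

With `freqs = {"a": 5, "b": 2, "c": 1, "d": 1, "r": 2}` (the letter counts of "abracadabra"), `rans_decode(rans_encode("abracadabra", freqs), 11, freqs)` returns the original string. ANS behaves like a stack: the last symbol encoded is the first one decoded, which is why the loop encodes in reverse. As we understand it, this last-in, first-out behavior is also what lets bits-back schemes pair ANS with latent variable models.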


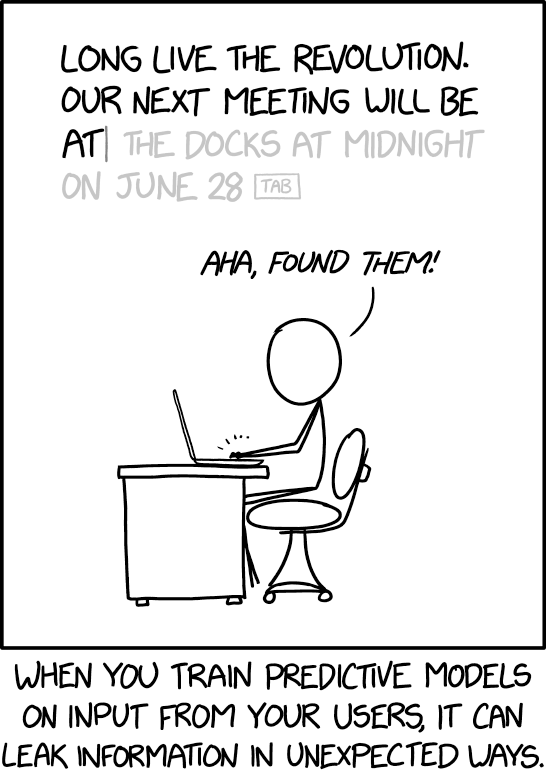

Nicholas Carlini

Aug 13, 2019

It is important whenever designing new technologies to ask “how will this affect people’s privacy?” This topic is especially important with regard to machine learning, where models are often trained on sensitive user data and then released to the public. For example, in the last few years we have seen models trained on users’ private emails, text messages, and medical records.

This article covers two aspects of our upcoming USENIX Security paper that investigates to what extent neural networks memorize rare and unique aspects of their training data. Specifically, we quantitatively study to what extent the following problem actually occurs in practice:


To operate successfully in a complex and changing environment, learning agents must be able to acquire new skills quickly. Humans display remarkable skill in this area: we can learn to recognize a new object from one example, adapt to driving a different car in a matter of minutes, and add a new slang word to our vocabulary after hearing it once. Meta-learning is a promising approach for enabling such capabilities in machines. In this paradigm, the agent adapts to a new task from limited data by leveraging a wealth of experience collected in performing related tasks. For agents that must take actions and collect their own experience, meta-reinforcement learning (meta-RL) holds the promise of enabling fast adaptation to new scenarios. Unfortunately, while the trained policy can adapt quickly to new tasks, the meta-training process requires large amounts of data from a range of training tasks, exacerbating the sample inefficiency that plagues RL algorithms. As a result, existing meta-RL algorithms are largely feasible only in simulated environments. In this post, we’ll briefly survey the current landscape of meta-RL and then introduce a new algorithm called PEARL that improves sample efficiency by orders of magnitude. (Check out the research paper and the code.)
